Gigabyte-accurate billing, i.e. charging storage back to your own customers, requires a stable and secure solution. The evaluation should be as granular as possible, even though billing itself typically only takes place at the end of the month. NetApp ONTAP provides the necessary tools out of the box: quotas are the key. The quota data can be retrieved via both the CLI and the REST API.

Especially in the cloud, such tasks need to be automated, and AWS offers an ideal platform for exactly this.

Requirements

To use the following scripts, quotas must be activated on FSxN (as on any other ONTAP system). This can be done via the CLI or via the REST API; this article only covers the CLI.

```
quota policy create -vserver <svm> -policy-name charge-back
vserver modify -vserver <svm> -quota-policy charge-back
```

After these preparations, a quota rule must be created and quotas must be activated on each volume. Ideally, this is automated; otherwise, repeat the following commands for each volume:

```
quota policy rule create -vserver <svm> -policy-name charge-back -volume <volume> -type tree -target "" -threshold 85
quota on -vserver <svm> -volume <volume>
```

Note: This quota only tracks the disk space used and the number of files in the volume/qtree. If you really want to limit the maximum size, the parameter -disk-limit or -soft-disk-limit must be set!

The quota settings can be verified with `quota report -vserver <svm> -volume <volume>`:

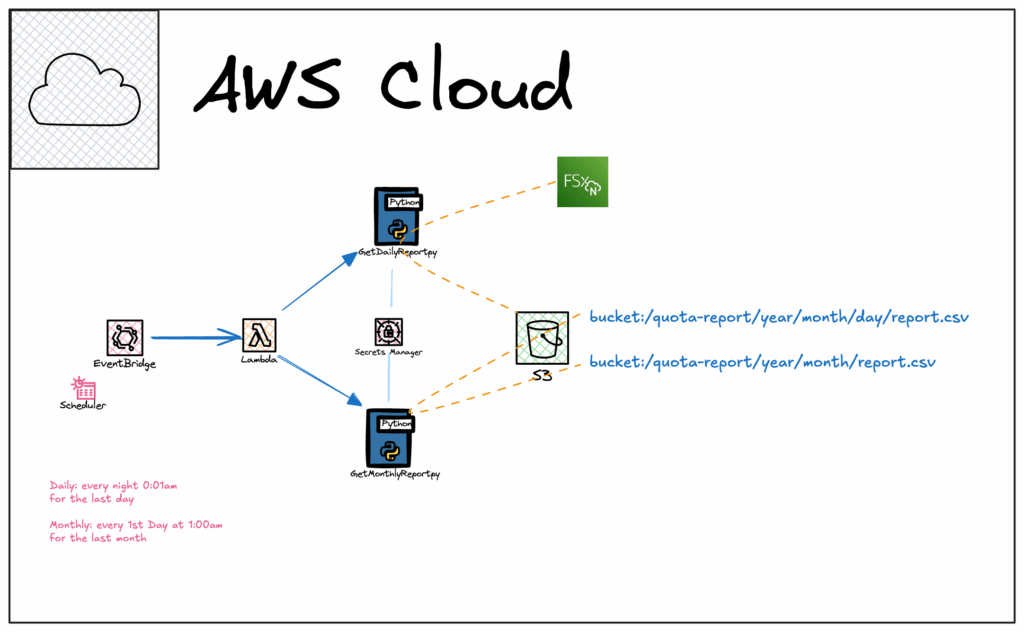

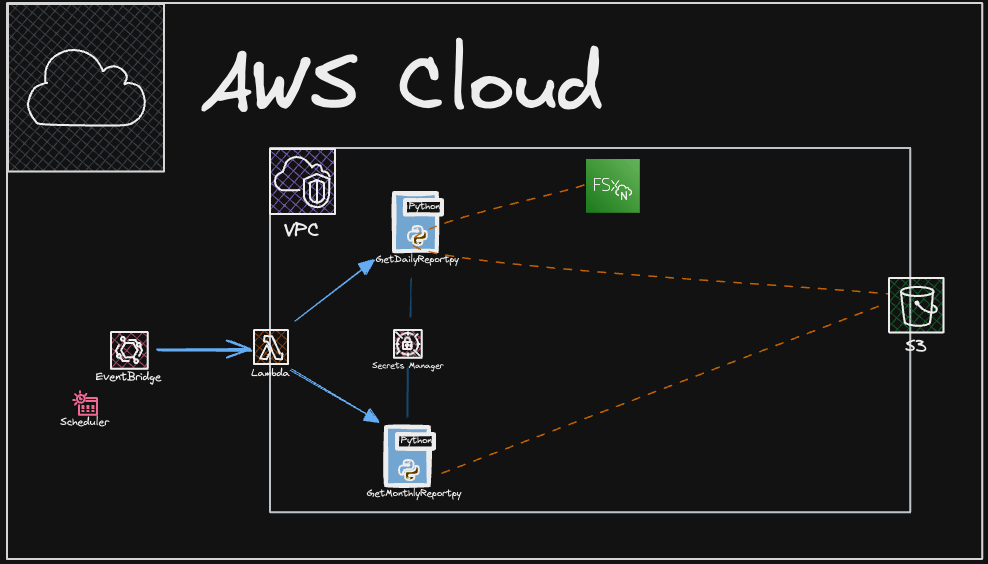
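The same data is exposed by the ONTAP REST API, which is what the Lambda functions query. A minimal sketch using only the Python standard library, assuming the `/api/storage/quota/reports` endpoint and the field names `space.used.total` and `files.used.total` (verify them against the REST API reference of your ONTAP version). Pagination via `_links.next` is not handled, and host and credentials are placeholders:

```python
import base64
import json
import ssl
import urllib.request

# Fields requested from the quota report endpoint (assumed names, verify for your ONTAP version).
FIELDS = "svm.name,volume.name,qtree.name,type,space.used.total,files.used.total"


def fetch_quota_report(host, user, password, verify_tls=True):
    """Return the raw quota report records from the ONTAP REST API."""
    url = f"https://{host}/api/storage/quota/reports?fields={FIELDS}&max_records=10000"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        url, headers={"Authorization": f"Basic {token}", "Accept": "application/json"}
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Self-signed management certificates are common; disable checks only if you accept the risk.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context, timeout=30) as response:
        return json.load(response).get("records", [])


def flatten_quota_records(records):
    """Turn nested REST records into flat rows, one per volume/qtree."""
    rows = []
    for record in records:
        rows.append({
            "svm": record.get("svm", {}).get("name", ""),
            "volume": record.get("volume", {}).get("name", ""),
            "qtree": record.get("qtree", {}).get("name", ""),
            "type": record.get("type", ""),
            "used_bytes": record.get("space", {}).get("used", {}).get("total", 0),
            "used_files": record.get("files", {}).get("used", {}).get("total", 0),
        })
    return rows
```

Usage: `rows = flatten_quota_records(fetch_quota_report("<management-ip>", "fsxadmin", "<password>"))`. Keeping the HTTP call and the flattening separate makes the parsing testable without a live system.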

Architecture

The following components are used for the automated quota reports:

- AWS EventBridge (formerly AWS CloudWatch Events)

- AWS Lambda

- AWS Secrets Manager

- AWS S3

AWS EventBridge schedules the report creation: its schedules define when the reports are created, and the runs must always execute at the same time. Two schedules are created in EventBridge for the quota reports: Daily and Monthly.

Each schedule invokes a Lambda function that creates the corresponding report and writes it to an S3 bucket.

Two Lambda functions are therefore required, one for the daily and one for the monthly reports. Both functions are written in Python and can be found in my GitHub repo.

The Process

There is a daily and a monthly script, each available in two versions. The scripts with the suffix _v2 are for the Lambda functions; the other two can be run from a Linux host.

But what does the process look like? EventBridge starts GenerateDailyQuotaReport_v2 every day, and it runs through the following steps:

- Retrieving the credentials for the FSxN systems from Secrets Manager

- Listing the FSxN systems in the VPC

- Connecting to the FSxN REST API via the management IP

- Reading the quota information

- Converting it to CSV, and to JSON for CloudWatch

- Uploading to S3
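The daily steps above can be chained roughly as follows. This is a sketch, not the code from the repository: the boto3 clients are passed in so the logic can be tested without AWS, the secret is assumed to hold the JSON keys `username` and `password`, and `read_quota_rows(ip)` is a hypothetical callable that performs the REST query for one system:

```python
import csv
import io
import json


def load_credentials(secrets_client, secret_id):
    """Step 1: read the FSxN credentials (assumed JSON keys: username, password)."""
    secret = json.loads(secrets_client.get_secret_value(SecretId=secret_id)["SecretString"])
    return secret["username"], secret["password"]


def list_ontap_management_ips(fsx_client, vpc_id):
    """Step 2: map each FSx for ONTAP file system in the VPC to its management IP."""
    ips, token = {}, None
    while True:
        kwargs = {"NextToken": token} if token else {}
        page = fsx_client.describe_file_systems(**kwargs)
        for fs in page.get("FileSystems", []):
            if fs.get("FileSystemType") != "ONTAP" or fs.get("VpcId") != vpc_id:
                continue
            mgmt = fs.get("OntapConfiguration", {}).get("Endpoints", {}).get("Management", {})
            if mgmt.get("IpAddresses"):
                ips[fs["FileSystemId"]] = mgmt["IpAddresses"][0]
        token = page.get("NextToken")
        if not token:
            return ips


def rows_to_csv(rows):
    """Step 5: serialize flat quota rows to CSV text (header taken from the first row)."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def run_daily_report(fsx_client, s3_client, vpc_id, bucket, key, read_quota_rows):
    """Steps 2 to 6; read_quota_rows(ip) is a hypothetical callable covering steps 3 and 4."""
    rows = []
    for fs_id, ip in list_ontap_management_ips(fsx_client, vpc_id).items():
        for row in read_quota_rows(ip):
            rows.append({"file_system": fs_id, **row})
    for row in rows:
        # Step 5: one JSON line per row; Lambda forwards stdout to CloudWatch Logs.
        print(json.dumps(row))
    # Step 6: upload the CSV file to S3.
    s3_client.put_object(Bucket=bucket, Key=key, Body=rows_to_csv(rows).encode("utf-8"),
                         ContentType="text/csv")
    return len(rows)
```

In a handler, this could be wired up with `boto3.client("fsx")`, `boto3.client("s3")` and `boto3.client("secretsmanager")`, with `read_quota_rows` closing over the credentials returned by `load_credentials`.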

Note: The output represents the data of the previous day. For this reason, the schedule should be set to shortly after midnight, e.g. 0:01 am.
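Because the report describes the day before the run, the report date and the S3 key can be derived from the run time. A sketch assuming a hypothetical key layout `daily/YYYY/MM/quota_YYYY-MM-DD.csv`; Lambda runs in UTC by default, so a run at 0:01 on March 1, 2024 reports February 29:

```python
from datetime import date, datetime, timedelta, timezone


def report_date(now: datetime = None) -> date:
    """Return the day a report run at `now` describes: the previous day (UTC)."""
    now = now or datetime.now(timezone.utc)
    return (now - timedelta(days=1)).date()


def daily_report_key(day: date, prefix: str = "daily") -> str:
    """Build a hypothetical S3 key such as daily/2024/02/quota_2024-02-29.csv."""
    return f"{prefix}/{day:%Y/%m}/quota_{day.isoformat()}.csv"
```

Grouping the keys by year and month also lets the monthly run read a single prefix.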

The GenerateMonthlyQuotaReport_v2 script:

- Connects to the bucket and reads all CSV files in the Daily folder

- Consolidates them into a monthly report

- Uploads the report to S3
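The monthly consolidation can be sketched like this. It assumes daily CSVs with the hypothetical columns `volume`, `qtree` and `used_bytes` and reports the average and the peak daily usage in GiB per qtree; which value is billed is up to you, and the repository's script may consolidate differently. Listing the Daily folder with `list_objects_v2` requires the `s3:ListBucket` permission on the bucket:

```python
import csv
import io
from collections import defaultdict

GIB = 1024 ** 3


def read_daily_csvs(s3_client, bucket, prefix):
    """Download every .csv object below `prefix` (e.g. a hypothetical "daily/2024/02/")."""
    texts, kwargs = [], {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            if obj["Key"].endswith(".csv"):
                body = s3_client.get_object(Bucket=bucket, Key=obj["Key"])["Body"].read()
                texts.append(body.decode("utf-8"))
        if not page.get("IsTruncated"):
            return texts
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


def consolidate_month(daily_csvs):
    """Merge daily CSV texts into one row per volume/qtree with average and peak usage in GiB."""
    samples = defaultdict(list)
    for text in daily_csvs:
        for row in csv.DictReader(io.StringIO(text)):
            samples[(row["volume"], row["qtree"])].append(int(row["used_bytes"]))
    report = []
    for (volume, qtree), values in sorted(samples.items()):
        report.append({
            "volume": volume,
            "qtree": qtree,
            "days": len(values),
            "avg_used_gib": round(sum(values) / len(values) / GIB, 3),
            "max_used_gib": round(max(values) / GIB, 3),
        })
    return report
```

The `days` column makes gaps visible, e.g. a qtree that was created in the middle of the month.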

EventBridge + Lambda configuration

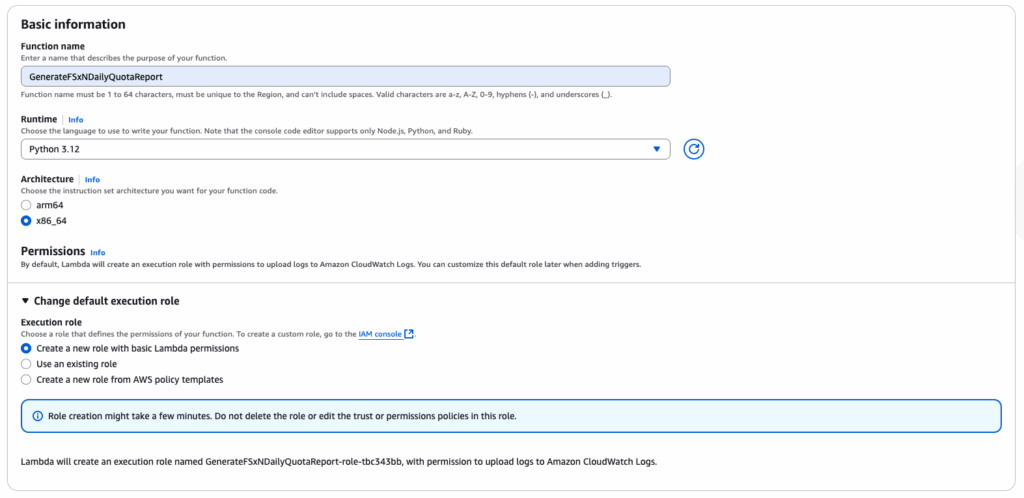

Here is how to configure it: in the AWS Console, go to Lambda -> Create function -> Author from scratch.

Open Additional configurations, enable VPC, and select the VPC and the subnets in which the FSxN systems are located. Apply a security group that allows HTTPS to the FSxN systems. Note that a VPC-attached Lambda function reaches the Secrets Manager, FSx and S3 APIs only via a NAT gateway or VPC endpoints. Select further options as needed, then press “Create function”.

Attach this additional policy to the generated execution role:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "AdditionalPolicies",

"Effect": "Allow",

"Action": [

"fsx:DescribeFileSystems",

"s3:GetObject",

"s3:PutObject",

"secretsmanager:GetSecretValue",

"secretsmanager:DescribeSecret"

],

"Resource": "*"

}

]

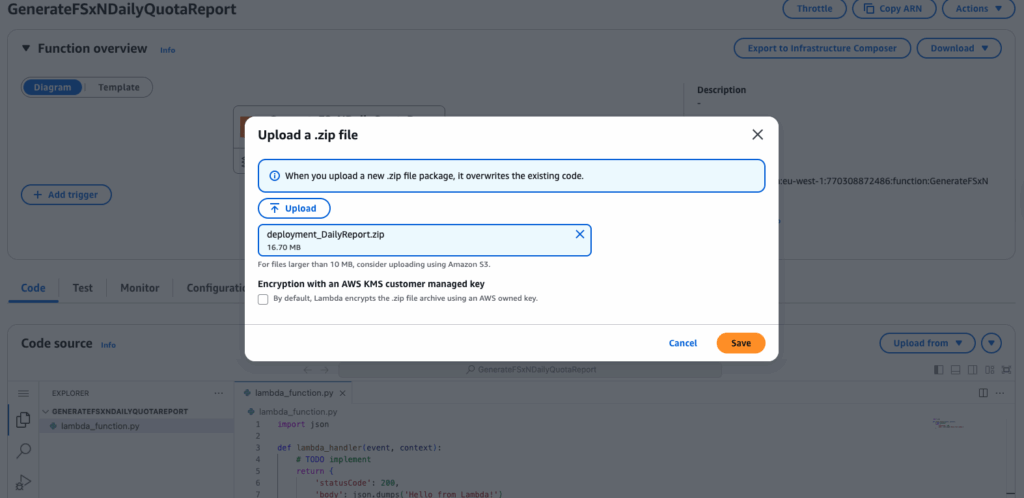

}Generate the deployment package file by running the GenerateDeploymentPackage.sh, which is available in the GitHub repo.

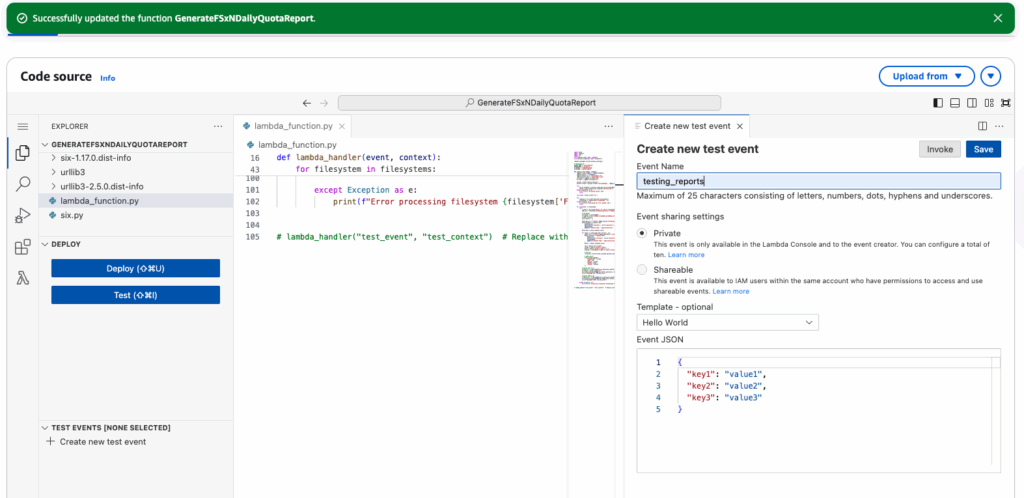

Upload the package file to the Lambda function.

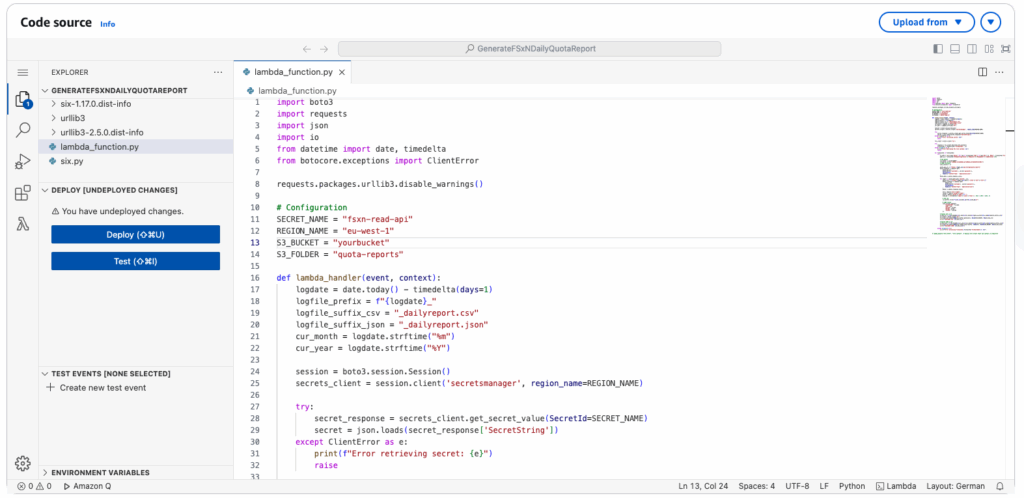

Adjust the variables in the configuration section of the script and press “Deploy” to activate the new code.
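As an alternative to editing the configuration section before every deployment, the settings could be read from the function's environment variables (Configuration -> Environment variables in the Lambda console). A sketch with hypothetical variable names; the repository's scripts define their own configuration block:

```python
import os

# Hypothetical variable names, not taken from the repository.
REQUIRED = ("SECRET_NAME", "BUCKET_NAME", "VPC_ID")


def load_config(environ=None):
    """Read the report settings from Lambda environment variables, failing fast if any is missing."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED if not environ.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    config = {name.lower(): environ[name] for name in REQUIRED}
    # Optional setting with a default value.
    config["daily_prefix"] = environ.get("DAILY_PREFIX", "daily")
    return config
```

Changing an environment variable takes effect without uploading a new deployment package.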

After successful deployment the function can be tested.

It can be default, because the event values have no impact to the function at the moment.

Change some configuration settings in the lambda functions:

- Timeouts from 3s to 60s. It’s depending on how many FSxN Systems and Volumes/qtrees

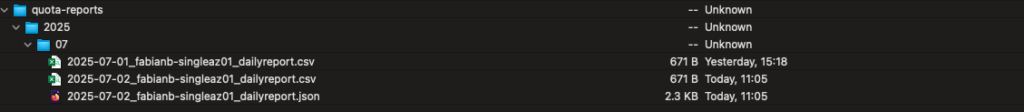

Now the function can be tested. The result will show a folder structure like this in the bucket:

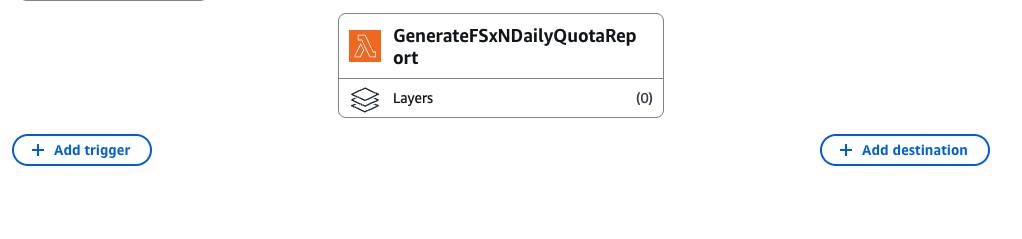

Schedule the function

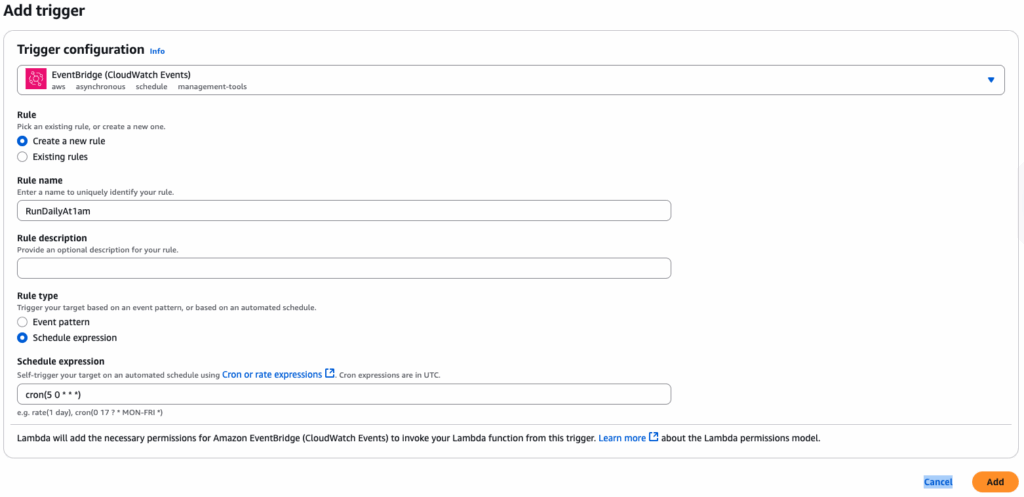

To run the report daily, a trigger must be added to the function:

- Type: EventBridge

- Rule name: e.g. RunDailyAt1h5am

- Schedule expression: e.g. cron(5 1 * * ? *) (EventBridge rules evaluate cron expressions in UTC)

Done.
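The same trigger can also be created programmatically. A sketch using the boto3 EventBridge and Lambda client calls `put_rule`, `put_targets` and `add_permission`; the target ID, the statement ID and the function ARN are placeholders:

```python
def create_daily_trigger(events_client, lambda_client, function_name, function_arn,
                         rule_name="RunDailyAt1h5am", schedule="cron(5 1 * * ? *)"):
    """Create a scheduled EventBridge rule and allow it to invoke the Lambda function."""
    rule_arn = events_client.put_rule(
        Name=rule_name, ScheduleExpression=schedule, State="ENABLED"
    )["RuleArn"]
    result = events_client.put_targets(
        Rule=rule_name, Targets=[{"Id": "quota-report", "Arn": function_arn}]
    )
    if result.get("FailedEntryCount"):
        raise RuntimeError(f"could not attach target: {result.get('FailedEntries')}")
    # Resource-based permission so that EventBridge may call the function.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    return rule_arn
```

Usage: `create_daily_trigger(boto3.client("events"), boto3.client("lambda"), "GenerateDailyQuotaReport_v2", "<function-arn>")`. Running it a second time fails at `add_permission`, because the statement ID already exists.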

The next post will be about the monthly report. Stay tuned!
